
Introduction

Whether the changes to the pharmaceutical industry and the world in the 1980s will prove most notable for the rise of and reaction to a new disease, AIDS, or the flowering of entrepreneurial biotechnology and genetic engineering, it is too soon to say. These changes (along with advances in immunology, automation, and computers; the development of new paradigms of cardiovascular and other diseases; and restructured social mores) all competed for attention in the transformational 1980s.

AIDS: A new plague

By the end of 1981, Pneumocystis carinii pneumonia (PCP) and Kaposi’s sarcoma (KS) were recognized as harbingers of a new and deadly disease. The disease was initially called Gay Related Immune Deficiency. Within a year, similar symptoms appeared in other demographic groups, primarily hemophiliacs and users of intravenous drugs. The CDC renamed the disease Acquired Immune Deficiency Syndrome (AIDS). By the end of 1983, the CDC had recorded some 3000 cases of this new plague. The prospects for AIDS patients were not good: almost half had already died.

AIDS did not follow normal patterns of disease and infection. It produced no visible symptoms, at least not until the advanced stages of infection. Instead of triggering an immune response, it insidiously destroyed the body’s own mechanisms for fighting off infection. People stricken with the syndrome died of a host of opportunistic conditions, such as rare viral and fungal infections and cancers. When the disease began to appear among heterosexuals, panic and fear increased. Physicians and scientists eventually mitigated some of the hysteria when they were able to explain the methods of transmission. As AIDS was studied, it became clear that the disease was spread through intimate contact such as sex and sharing syringes, as well as transfusions and other exposure to contaminated blood. It could not be spread through casual contact such as shaking hands, coughing, or sneezing.

In 1984, Robert Gallo of the National Cancer Institute (NCI) and Luc Montagnier of the Institut Pasteur proved that AIDS was caused by a virus. There is still a controversy over priority of discovery. However, knowledge of the disease’s cause did not mean readiness to combat the plague. Homophobia and racism, combined with nationalism and fears of creating panic in the blood supply market, contributed to deadly delays before action was taken by any government. The initially low number of sufferers skyrocketed around the world, creating an uncontrollable epidemic.

Immunology comes of age

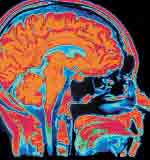

Early efforts to study immunology were aimed at understanding the structure and function of the immune system, but some scientists looked to immunology to try to understand diseases that lacked a clear outside agent. In some diseases, some part of the body appears to be attacked not by an infectious agent but by the immune system. Physicians and researchers wondered if the immune system could cause, as well as defend against, disease. By the mid-1980s, it was clear that numerous diseases, including lupus and rheumatoid arthritis, were connected to an immune system malfunction. These were called “autoimmune diseases” because they were caused by a patient’s own immune system. Late in 1983, juvenile-onset diabetes was shown to be an autoimmune disease in which the body’s immune system attacks insulin-producing cells in the pancreas. Allergies were also linked to overreactions of the immune system. By 1984, researchers had discovered an important piece of the puzzle of immune system functioning. Professor Susumu Tonegawa and his colleagues discovered how the immune system recognizes “self” versus “not-self”—a key to immune system function. Tonegawa elucidated the complete structure of the cellular T cell receptor and the genetics governing its production. It was already known that T cells were the keystone of the entire immune system. Not only do they recognize self and not-self and so determine what the immune system will attack, they also regulate the production of B cells, which produce antibodies. Immunologists regarded this as a major breakthrough, in large part because the human immunodeficiency virus (HIV) that causes AIDS was known to attack T cells. The T cell response is also implicated in other autoimmune diseases and many cancers in which T cells fail to recognize not-self cells. The question remained, however, how the body could possibly contain enough genes to account for the bewildering number of immune responses. 
In 1987, for the third time in the decade, the Nobel Prize in Physiology or Medicine went to scientists working on the immune system. As Tonegawa had demonstrated in 1976, the immune system can produce an almost infinite number of responses, each of which is tailored to suit a specific invader. Tonegawa showed that rather than containing a vast array of genes for every possible pathogen, a few genetic elements reshuffled themselves. Thus a small amount of genetic information could account for many antibodies. The immune system relies on the interaction of numerous kinds of cells circulating throughout the body. Unfortunately, AIDS was known to target those very cells. There are two principal types of cells, B cells and T cells. T cells, sometimes called “helper” T cells, direct the production of B cells, an immune response targeted to a single type of biological or chemical invader. There are also “suppressor” cells to keep the immune response in check. In healthy individuals, helpers outnumber suppressors by about two to one. In immunocompromised individuals, however, the T cells are exceedingly low and, accordingly, the number of suppressors extremely high. This imbalance appears capable of shutting down the body’s immune response, leaving it vulnerable to infections a healthy body wards off with ease. Eventually, scientists understood the precise mechanism of this process. Even before 1983, when the viral cause of the disease was determined, the first diagnostic tests were developed to detect antibodies related to the disease. Initially, because people at risk for AIDS were statistically associated with hepatitis, scientists used the hepatitis core antibody test to identify people with hepatitis, and therefore, at risk for AIDS. By 1985, a diagnostic method was specifically designed to detect antibodies produced against the low titer HIV itself. Diagnosing infected individuals and protecting the valuable world blood supply spurred the diagnosis effort.
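The reshuffling idea credited to Tonegawa above (a few genetic elements recombining to yield many antibodies) can be illustrated with simple counting. The following is only a sketch under stated assumptions: the pool names (`segment_a`, `segment_b`, `segment_c`) and their sizes are hypothetical placeholders chosen for the arithmetic, not figures from the article or measured values.

```python
from itertools import product
from math import prod

# Hypothetical pools of reusable genetic elements. The names and sizes are
# illustrative assumptions only, not data from the article.
POOLS = {
    "segment_a": 40,
    "segment_b": 25,
    "segment_c": 6,
}


def elements_stored(pools):
    """Total number of distinct elements that have to be stored."""
    return sum(pools.values())


def combinations_possible(pools):
    """Number of distinct results when one element is drawn from each pool."""
    return prod(pools.values())


def enumerate_combinations(pools):
    """Iterate over every combination as a tuple of element labels."""
    labelled = [
        [f"{name}{i}" for i in range(1, size + 1)]
        for name, size in pools.items()
    ]
    return product(*labelled)


if __name__ == "__main__":
    print(elements_stored(POOLS), "stored elements")
    print(combinations_possible(POOLS), "possible combinations")
```

With these placeholder sizes, 71 stored elements yield 6000 combinations, because the number of elements grows as a sum while the number of combinations grows as a product. That gap is the point of the passage: a small amount of genetic information can account for many antibodies.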

By the late 1980s, under the impetus and fear associated with AIDS, both immunology and virology received huge increases in research funding, especially from the U.S. government.

Pharmaceutical technology and AIDS

In 1987, the ultimate approval of AZT as an antiviral treatment for AIDS was the result of both the hard technology of the laboratory and the soft technologies of personal outrage and determination (and deft use of the 1983 Orphan Drug Act). Initially, the discovery of the viral nature of AIDS resulted in little, if any, R&D in corporate circles. The number of infected people was considered too small to justify the cost of new drug development, and most scientists thought retroviruses were untreatable. However, Sam Broder, a physician and researcher at the NCI, thought differently. As clinical director of the NCI’s 1984 Special Task Force on AIDS, Broder was determined to do something. Needing a larger lab, Broder went to the pharmaceutical industry for support. As Broder canvassed the drug industry, he promised to test potentially useful compounds in NCI labs if the companies would commit to develop and market drugs that showed potential. One of the companies he approached was Burroughs Wellcome, the American subsidiary of the British firm Wellcome PLC. Burroughs Wellcome had worked on nucleoside analogues, a class of antivirals that Broder thought might work against HIV. Burroughs Wellcome had successfully brought to market an antiherpes drug, acyclovir. Although many companies were reluctant to work on viral agents because of the health hazards to researchers, Broder persevered. Finally, Burroughs Wellcome and 50 other companies began to ship chemicals to the NCI labs for testing. Each sample was coded with a letter to protect its identity and to protect each company’s rights to its compounds. In early 1985, Broder and his team found a compound that appeared to block the spread of HIV in vitro. Coded Sample S, it was AZT sent by Burroughs Wellcome. There is a long road between in vitro efficacy and shipping a drug to pharmacies—a road dominated by the laborious approval process of the FDA. 
The agency’s mission is to keep dangerous drugs away from the American public, and after the thalidomide scare of the late 1950s and early 1960s, the FDA clung tenaciously to its policies of caution and stringent testing. However, with the advent of AIDS, many people began to question that caution. AZT was risky. It could be toxic to bone marrow and cause other less drastic side effects such as sleeplessness, headaches, nausea, and muscular pain. Even though the FDA advocated the right of patients to knowingly take experimental drugs, it was extremely reluctant to approve AZT. Calls were heard to reform or liberalize the approval process, and a report issued by the General Accounting Office (GAO) claimed that of 209 drugs approved between 1976 and 1985, 102 had caused serious side effects, giving the lie to the apparent myth that FDA approval automatically safeguarded the public. The agendas of patient advocates, ideological conservatives who opposed government “intrusion,” and the pharmaceutical industry converged in opposition to the FDA’s caution. But AIDS attracted more than its share of false cures, and the FDA rightly kept such things out of the medical mainstream. Nonetheless, intense public demand (including protests by AIDS activist groups such as ACT UP) and unusually speedy testing brought the drug to the public by the late 1980s. AZT was hard to tolerate and, despite the misapprehensions of its critics, it was never thought to be a magic bullet that would cure AIDS. It was a desperate measure in a desperate time that at best slowed the course of the disease. AZT was, however, the first of what came to be a major new class of antiviral drugs. Its approval process also had ramifications. The case of AZT became the tip of the iceberg in a new world where consumer pressures on the FDA, especially from disease advocacy groups and their congressional supporters, would lead to more numerous and more rapid approvals of experimental drugs for certain conditions. 
It was a controversial change that in the 1990s would create more interest in “alternative medicine,” nutritional supplements, fast-track drugs, and attempts to further weaken the FDA’s role in the name of both consumer rights and free enterprise.

Computers and pharmaceuticals

Unfortunately for the industry, its shareholders, and sick people who might have been helped by these elegantly tailored compounds, it did not turn out that way. By the end of the decade, it was clear that whatever economic usefulness there was in molecular biology developments (via increased efficiency of chemical drugs), such developments did not yet enable the manufacturing of complex biologically derived drugs. So while knowledge of protein structure has supplied useful models for cell receptors (such as the CD4 receptors on T cells, to which drugs might bind), it did not produce genetic “wonder drugs.” Concomitant with this interest in molecular biology and genetic engineering was the development of a new way of conceiving drug R&D: a new soft technology of innovation. Throughout the history of the pharmaceutical industry, discovering new pharmacologically active compounds depended on a “try and see” empirical approach. Chemicals were tested for efficacy in what was called “random screening” or “chemical roulette,” names that testify to the haphazard and chancy nature of this drug discovery approach. The rise of molecular biology, with its promise of genetic engineering, fostered a new way of looking at drug design. Instead of an empirical “try and see” method, pharmaceutical designers began to compare knowledge of human physiology and the causes of medical disorders with knowledge of drugs and their methods of physiological action to conceptualize the right molecules. This ideal design is then handed over to research chemists in the laboratory, who search for a close match. In this quest, the biological model of cell receptor and biologically active molecule serves as a guide. The role of hard technologies in biologically based drug research cannot be overstated. In fact, important drug discoveries owe a considerable amount to concomitant technological developments, particularly in imaging technology and computers. 
X-ray crystallography, scanning electron microscopy, NMR, and laser and magnetic- and optical-based imaging techniques allow the visualization of atoms within a molecule. This capability is crucial, as it is precisely this three-dimensional arrangement that gives matter its chemically and biologically useful qualities. Computers were of particular use in this brave new world of drug design, and their power and capabilities increased dramatically during the 1980s. The increased computational power of computers enabled researchers to work through the complex mathematics that describe the molecular structure of idealized drugs. Drug designers, in turn, could use the increasingly powerful imaging capabilities of computers to convert mathematical protein models into three-dimensional images. Gone were the days when modelers used sticks, balls, and wire to create models of limited scale and complexity. In the 1980s, they used computers to transform mathematical equations into interactive, virtual pictures of elegant new models made up of thousands of different atoms.

Since the 1970s, the pharmaceutical industry had been using computers to design drugs to match receptors, and it was one of the first industries to harness the steadily increasing power of computers to design molecules. Software applications to simulate drugs first became popular in the 1980s, as did genetics-based algorithms and fuzzy logic. Research on genetic algorithms began in the 1970s and continues today. Although not popular until the early 1990s, genetic algorithms allow drug designers to “evolve” a best fit to a target sequence through successive generations until a fit or solution is found. Algorithms have been used to predict physiological properties and bioactivity. Fuzzy logic, which formalizes imprecise concepts by defining degrees of truth or falsehood, has proven useful in modeling pharmacological action, protein structure, and receptors.

The business of biotechnology

Eli Lilly was one of the big pharma companies that formed early partnerships with smaller biotech firms. Beginning in the 1970s, Lilly was one of the first drug companies to enter into biotechnology research. By the mid-1980s, Lilly had already put two biotechnology-based drugs into production: insulin and human growth hormone. Lilly produced human insulin through recombinant DNA techniques and marketed it, beginning in 1982, as Humulin. The human genes responsible for producing insulin were grafted into bacterial cells through a technique first developed in the production of interferon in the 1970s. Insulin, produced by the bacteria, was then purified using monoclonal antibodies. Diabetics no longer had to take insulin isolated from pigs.

By 1987, Lilly ranked second among all institutions (including universities) and first among companies (including both large drug manufacturers and small biotechnology companies) in U.S. patents for genetically engineered drugs. By the late 1980s, Lilly recognized the link between genetics, modeling, and computational power and, already well invested in computer hardware, the company moved to install a supercomputer. In 1988, typical of many of the big pharma companies, Lilly formed a partnership with a small biotechnology company: Agouron Pharmaceuticals, a company that specialized in three-dimensional computerized drug design. The partnership gave Lilly expertise in an area it was already interested in, as well as manufacturing and marketing rights to new products, while Agouron gained a stable source of funding. Such partnerships united small firms that had narrow but potentially lucrative specializations with larger companies that already had development and marketing structures in place. Other partnerships between small and large firms allowed large drug companies to “catch up” in a part of the industry in which they were not strongly represented. This commercialization of drug discovery allowed companies to apply the results of biotechnology and genetic engineering on an increasing scale in the late 1980s, a process that continues.

The rise of drug resistance

Tuberculosis in particular experienced a resurgence. In the mid-1980s, the worldwide decline in tuberculosis cases leveled off and then began to rise. New cases of tuberculosis were highest in less developed countries, and immigration was blamed for the increased number of cases in developed countries. (However, throughout the 20th century in the United States, tuberculosis was a constant and continued health problem for senior citizens, Native Americans, and the urban and rural poor. In 1981, an estimated 1 billion people—one-third of the world’s population—were infected. So thinking about tuberculosis in terms of a returned epidemic obscures the unabated high incidence of the disease worldwide over the preceding decades.)

The most troubling aspect of the “reappearance” of tuberculosis was its resistance to not just one or two drugs, but to multiple drugs. Multidrug resistance stemmed from several sources. Every use of an antibiotic against a microorganism is an instance of natural selection in action, an evolutionary version of the Red Queen’s Race, in which you have to run as fast as you can just to stay in place. Using an antibiotic kills susceptible organisms. Yet mutant organisms are present in every population. If even a single resistant pathogenic organism survives, it can multiply freely. Agricultural and medical practices have contributed to resistant strains of various organisms. In agriculture, animal feed is regularly and widely supplemented with antibiotics in subtherapeutic doses. In medical practice, there has been widespread indiscriminate and inappropriate use of antibiotics, to the degree that hospitals have become reservoirs of resistant organisms. Some tuberculosis patients unwittingly fostered multidrug-resistant tuberculosis strains by failing to comply with the admittedly lengthy, but required, drug treatment regimen.

In this context, developing new and presumably more powerful drugs and technologies became even more important. One such technology was combinatorial chemistry, a nascent science at the end of the 1980s. Combinatorial chemistry sought to find new drugs by, in essence, mixing and matching appropriate starter compounds and reagents and then assessing them for suitability. Computers were increasingly important as the approach matured. Specialized software was developed that could not only select appropriate chemicals but also sort through the enormous number of potential drugs.

Prevention, the best cure

While new drugs were being designed, medicine focused on preventing disease rather than simply trying to restore some facsimile of health after it had developed. The link between exercise, diet, and health dates back 4000 years or more. More recently, medical texts from the 18th and 19th centuries noted that active patients were healthier patients. In the early 1900s, eminent physician Sir William Osler characterized heart disease as rare. By the 1980s, in many Western countries some 30% of all deaths were attributed to heart disease, and for every two people who died from a heart attack, another three suffered one but survived. During this decade, scientists finally made a definitive connection between heart disease and diet, cholesterol, and exercise levels. So what prompted this change in perspective? In part, the answer lies with the spread of managed care. Managed care started in the United States before World War II, when Kaiser-Permanente got its start. Health maintenance organizations (HMOs), of which Kaiser was and is the archetypal representative and which were and are controversial, spread during the 1980s as part of an effort to contain rising medical costs. One way to keep costs down was to prevent people from becoming ill in the first place. But another reason for the growth of managed care had to do with a profound shift in the way that diseases, particularly diseases of lifestyle, were approached. Coronary heart disease has long been considered the emblematic disease of lifestyle. During the 1980s, a causal model of heart disease that had been around since the 1950s suddenly became the dominant, if not the only, paradigm for heart disease. This was the “risk factor approach.” This view of heart disease—its origins, its outcomes, its causes—is a set of unquestioned and unstated assumptions about how individuals contribute to disease. 
Drawing from population studies about the relationship between heart disease and individual diet, genetic heritage, and habits, as much as from biochemical and physiological causes of atherosclerosis, the risk factor approach tended to be a more holistic approach. Whereas the older view of disease prevention focused on identifying those who either did not know they were affected or who had not sought medical attention (for instance, tuberculosis patients earlier in the century), this new conceptual technology aimed to identify individuals who were at risk for a disease. According to the logic of this approach, everyone was potentially at risk for something, which provided a rationale for population screening. It was a new way to understand individual responsibility for and contribution to disease.

Focusing on risk factors reflected a cultural preoccupation with the individual and the notion that individuals were responsible for their own health and illness. As part of a wave of new conservatism against the earlier paternalism of the Great Society, accepting the risk factor approach implied that social contributions to disease, such as the machinations of the tobacco and food industries, poverty, and work-related sedentary lifestyles, were not to blame for illness. Individuals were considered responsible for choices and behavior that ran counter to known risk factors. While heart disease became a key focus of the 1980s, other disorders, including breast, prostate, and colon cancer, were ultimately subsumed by this risk factor approach. AIDS, the ultimate risk factor disease, became the epitome of this approach. Debates raged about the disease and related issues of homosexuality, condoms, abstinence, and needle exchange and tore at the fabric of society as the 1990s dawned.

Conclusion


© 2000 American Chemical Society
